How Agility Enhances Software Architecture

There is an antagonism between agility and software architecture that is based on a flawed model of how software architecture is created: largely as an up-front, purely intellectual exercise whose output is a set of diagrams and designs based on assumptions and poorly documented Quality Attribute Requirements (QARs). In reality, agility and software architecture are mutually reinforcing - a sound architecture helps teams build better solutions in a series of short intervals, and gradually evolving a system’s architecture helps by validating and improving it over time.

Decisions are the essence of software architecture

In an article we published on the topic of software architecture, we asserted that software architecture is all about decisions. This view is shared by others as well:

"we do not view a software architecture as a set of components and connectors, but rather as the composition of a set of architectural design decisions"- Jan Bosch and Anton Jansen (IEEE 2005)

So which decisions are architectural? In another article, we introduced the concept of a cost threshold, which is the maximum amount that an organization would be willing to pay to achieve a particular outcome or set of outcomes, expressed in present value, and based on the organization’s desired, and usually risk-adjusted, rate of return.

Architectural decisions, then, are those that might cause the cost threshold for the product to be exceeded. In other words,

“Software architecture is the set of design decisions which, if made incorrectly, may cause your project [or product] to be canceled.” - Eoin Woods (SEI, 2010)
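To make the cost-threshold test concrete, the comparison can be sketched as a present-value calculation. This is a minimal illustration only: the function names, the end-of-year cost layout, and every figure below are assumptions made for the example, not values or methods taken from the articles cited above.

```python
def present_value(cash_flows: list[float], rate: float) -> float:
    """Discount a series of end-of-year amounts back to today.

    cash_flows[0] is the amount at the end of year 1, and so on.
    """
    return sum(amount / (1 + rate) ** year
               for year, amount in enumerate(cash_flows, start=1))


def exceeds_cost_threshold(projected_costs: list[float],
                           cost_threshold: float,
                           rate: float) -> bool:
    """True if the discounted lifetime cost is above the threshold."""
    return present_value(projected_costs, rate) > cost_threshold


# Hypothetical figures: three years of cost at a 10% risk-adjusted rate.
baseline = [100_000.0, 100_000.0, 100_000.0]
with_rework = [100_000.0, 150_000.0, 100_000.0]  # year-2 rework adds 50,000
threshold = 250_000.0

print(exceeds_cost_threshold(baseline, threshold, 0.10))     # False
print(exceeds_cost_threshold(with_rework, threshold, 0.10))  # True
```

With these made-up numbers, the baseline plan stays just under the threshold, while a decision that forces 50,000 of rework in year two pushes the product over it. In the terms used here, that second decision is an architectural one.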

No matter what you do, you will have an architecture…

Whether it is any good depends on your decisions. Since teams are making decisions constantly, they need to constantly ask themselves, “Will this decision we are about to make cause the cost threshold, over the lifetime of the product, to be exceeded?” For many decisions, the answer is “no”; they are free to make the decision they feel is best.

Architectural decisions tend to be focused on certain kinds of questions:

- Scalability: will the product perform acceptably when workloads increase?

- Security: will it be acceptably secure?

- Responsiveness: will it provide acceptable responsiveness to user-initiated events? Will it provide acceptable responsiveness to externally-generated events?

- Persistency: what are the throughput and structure (or lack thereof) of data that must be stored and retrieved?

- Monitoring: how will the product be instrumented so that the people who support the product can understand when it starts to fail to meet QARs and prevent critical system issues?

- Platform: how will it meet QARs related to system resource constraints such as memory, storage, event signaling, etc? For example, real-time and embedded products (such as a digital watch, or an automatic braking system) have quite different constraints than cloud-based information systems.

- User interface: how will it communicate with users? For example, virtual reality interfaces have quite different QARs than 2-dimensional graphical user interfaces, which have quite different QARs than command-line interfaces.

- Distribution: Can applications or services be relocated, or must they run in a particular environment? Can data be relocated dynamically, or does it have to reside in a particular data store location?

- Sustainability: Will the product be supportable in a cost-effective way? Can the team evaluate the quality of their architectural decisions as they develop the MVA, by adding sustainability criteria to their “Definition of Done”?

When the architecture of a system "emerges" in an unconscious way, without explicitly considering these questions, it tends to be brittle and costly to change, if change is even possible.

Even a non-decision is a decision

Postponing a decision still has an impact, because deciding not to address something now often means you continue to work under your existing assumptions. If these turn out to be wrong, you may have to undo the work that is based on them. The relevant question teams need to ask is, “Are we about to do work that, at some point, may need to be undone and redone?” If the answer is yes, you should make a conscious decision about the issue at hand. Rework is OK so long as you understand the potential cost and it doesn’t push you beyond your cost threshold.

In a series of prior articles, we introduced the concept of a Minimum Viable Architecture (MVA), which supports the architectural foundations of the Minimum Viable Product (MVP).

If not making an architectural decision will affect the viability of the MVP, the decision needs to be addressed immediately. For example, if the MVP has to support 1000 concurrent users to be financially viable, then the decisions about scalability and concurrency that support this QAR need to be made now. However, no decision should be made beyond what is strictly required for the success proposition of the MVP.

Investing in more than is needed to support the necessary level of success of the MVP would be wasteful since that level of investment (i.e. solving those problems) may never be needed; if the MVP fails, no more investment is needed in the MVA.

Agility and software architecture

Some people believe that the architecture of a software system will emerge rather naturally as a by-product of the development work. In their view, there is no need to design an architecture as it is mostly structural, and a good structure can be developed by thoughtfully refactoring the code. Refactoring code and developing a good modular code structure is important, but well-structured code does not solve all the problems that a good architecture must solve.

Unfortunately, you can only know whether a decision is incorrect through empiricism, which means that every architectural decision needs to be validated by running controlled experiments. This is where an agile approach helps: every development timebox (e.g. Sprint, increment, etc.) provides an opportunity to test assumptions and validate decisions. By testing and validating (or rejecting) decisions early, the team limits the amount of rework caused by decisions that turn out to be incorrect. And there will always be some decisions that turn out to be incorrect.

By providing a way to empirically validate architectural decisions, an agile approach helps the team to improve the architecture of their product over time.

Agility requires making architectural decisions under extreme time pressure

In an agile approach, the development team evolves the MVP in very short cycles, measured in days or weeks rather than months, quarters, and years, as often happens in traditional development approaches. In order to ensure they can sustain the product over its desired lifespan, they must also evolve the architecture of the release (the MVA). In other words, their forecast for their work must include the work they need to do to evolve the MVA.

As implied in the previous section, this involves asking two questions:

- Will the changes to the MVP change any of the architectural decisions made by the team?

- Have they learned anything since the previous delivery of their product that will change the architectural decisions they have made?

The answer to these questions, at least initially, is usually “we don’t know yet” so the development team usually needs to do some work to answer the questions more confidently.

One way to do this is for the team to include revisiting architectural decisions as part of their Definition of Done for a release. Leaving time to make this happen usually means reducing the functional scope of a release to account for the additional architectural work.

So what does a release need to contain? First and foremost, it has to be valuable to customers by improving the outcomes they experience. Second, providing those outcomes includes an implicit commitment to support the outcomes for the life of the product. As a result, the product’s architecture must evolve along with the new outcomes it provides.

Because the team is delivering in very short cycles, it is constantly making trade-offs. Sometimes these trade-offs are between different product capabilities, and sometimes between product capabilities and architectural capabilities. These trade-offs mean that good architecture is never perfect; it’s (at best) just barely adequate.

Various forces make these trade-offs more challenging, including:

- Taking on too much scope for the increment; teams need to resist doing this and de-scope to make time for architecture work.

- Being subject to external mandates with fixed scope, e.g. new regulations; teams are tempted to sacrifice the MVA in the short run, and they may need to (since regulators rarely consider achievability when they make mandates), but they need to be aware of the potential consequences and factor the deferred work into later releases. Intentionally incurring technical debt is one example of this.

- Adopting unproven, unstable, or unmastered technology; teams doing this are committing, in the vernacular of sports, unforced errors; they need to build evaluation experiments into their release work to prove out the technology and (this is the most important part) have a backup and back-out plan if they need to revert to more proven technologies.

- Being subject to urgent business needs driven by competition, or market opportunity; they need to keep in mind that short-term “wins” that can’t be sustained because the product can’t be supported represent a kind of Pyrrhic victory that can kill a business.

As with many things, timing is everything

When making architectural decisions, teams balance two different constraints:

- If the work they do is based on assumptions that later turn out to be wrong, they will have more work to do: the work needed to undo the prior work, and the new work related to the new decision.

- They need to build things and deliver them to customers in order to test their assumptions, not just about the architecture, but also about the problems that customers experience and the suitability of different solutions to solve those problems.

No matter what, teams will have to do some rework. Minimizing rework while maximizing feedback is the central concern of the agile team. The challenge they face in each release is that they need to run experiments that validate both their understanding of what customers need and the viability of their evolving answer to those needs. If they spend too much time focused just on the customer needs, they may find their solution is not sustainable, but if they spend too much time assessing the sustainability of the solution, they may lose customers who run out of patience waiting for their needs to be met.

Teams are sometimes tempted to delay decisions because they think that they will know more in the future. This isn’t automatically true, since teams usually need to run intentional experiments to gather the information they need to make decisions. Continually delaying these experiments can have a tremendous cost when work needs to be undone because prior decisions have to be reversed.

There is no such thing as a “last responsible moment”

Principles such as "delay decisions until the last responsible moment" seem to provide useful guidance to teams by giving them permission to delay decisions as long as possible. In this way of thinking, a team should delay a decision on a specific architectural design to implement scalability requirements until those requirements are reasonably known and quantified.

While the spirit of delaying design decisions until the “last responsible moment” is well-intentioned, seeking to reduce waste by reducing the amount of work done to solve problems that may never occur, the concept is challenging to implement in practice. In short, it’s almost impossible to know when the “last responsible moment” is until after it has passed. For example, scalability requirements may not be accurately quantified until after the software system has been in use for a period of time. In this instance, the architectural design may need to be refactored, perhaps several times, regardless of when decisions were made.

To address this, a team needs to give itself some options by running experiments. It may need to experiment with the limits of the scalability of its current design by pushing an early version of the system or a prototype until it fails with simulated workloads, to know where its current boundaries lie. It can then ask itself if those limits are likely to be exceeded in the real world. If the answer is, “possibly, if…” then the team will probably need to make some different decisions to expand this limit. Drastically shortening the time required to run and analyze experiments, or even eliminating them, results in decisions based on guesses, not facts. It is also unclear what “delaying decisions until the last responsible moment” would mean in this case or how it would help.
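A scalability experiment of this kind can be sketched as a simple load ramp. The sketch below is an assumption-laden illustration: `find_breaking_point` and `toy_system` are hypothetical names, and the toy latency model stands in for measurements that a real team would take from a prototype or an early version of the system under simulated workloads.

```python
from typing import Callable, Optional


def find_breaking_point(measure_latency_ms: Callable[[int], float],
                        max_acceptable_ms: float,
                        start_users: int = 100,
                        step: int = 100,
                        max_users: int = 10_000) -> Optional[int]:
    """Ramp the simulated workload until the latency limit is violated.

    Returns the first user count at which latency exceeds the limit,
    or None if the limit holds all the way up to max_users.
    """
    for users in range(start_users, max_users + 1, step):
        if measure_latency_ms(users) > max_acceptable_ms:
            return users
    return None


def toy_system(users: int) -> float:
    """Stand-in for a real measurement: latency climbs as load nears capacity."""
    capacity = 1500  # hypothetical saturation point, in concurrent users
    if users >= capacity:
        return float("inf")
    base_latency_ms = 50.0
    return base_latency_ms / (1 - users / capacity)


breaking_point = find_breaking_point(toy_system, max_acceptable_ms=200.0)
print(breaking_point)  # 1200 for this toy model
```

For this toy model, the 200 ms limit is first violated at 1200 simulated users. If the MVP must support 1000 concurrent users, the team now has a fact to reason from: the current design has some headroom, but not much, and the next question is whether real-world demand is likely to exceed it.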

Also, most decisions are biased by the inertia of what the team already knows. For example, when selecting a programming language for delivering an MVP, a team may lean toward technologies that most team members are already familiar with, especially when delivery timeframes are tight. In these situations, avoiding delays caused by the learning curve associated with a new technology may take precedence over other selection criteria, and the tool selection decision would be made as early as possible - probably not “at the last responsible moment”.

In the end, principles that encourage us to make decisions “just in time” aren’t very helpful. Every architectural decision is a compromise. Every architectural decision will turn out to be wrong at some point and will need to be changed or reversed. Usually, teams don’t know when the “last responsible moment” is until it has already passed. Without some way of determining what the last responsible moment is, the admonition really doesn’t mean anything.

For every evolution of the MVP, re-examine the MVA

A more concrete approach helps teams know what architectural decisions they must make, and when they must make them. Developing and delivering a product in a series of releases, each of which builds upon the previous one and expands the capabilities of the product, can be thought of as a series of incremental changes to the MVP.

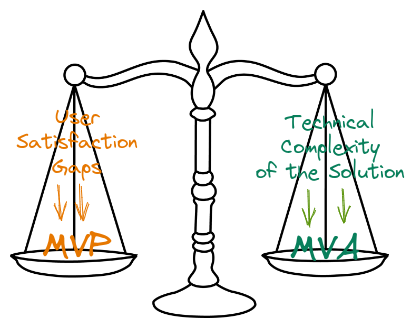

Various forces influence investments in MVPs versus MVAs. MVPs grow because of the need to respond to large user satisfaction gaps, while MVAs grow due to increases in the technical complexity of the solution needed to support the MVP. Teams must balance these two sets of forces in order to develop successful and sustainable solutions (see Figure 1).

Figure 1: Balancing MVP and MVA releases is critical for Agile Architecture

This is a slightly different way of looking at the MVP. Many people think of the MVP as only the first release, but we think of each release as an evolution of the MVP, an incremental change in its capabilities, and one that still focuses on a minimal extension of the current MVP. With an agile approach, there is no point at which “minimum viability” as a concept is abandoned.

With each MVP delivery, there are several possible outcomes:

- The MVP is successful and the MVA doesn’t need to change;

- The MVP is successful but the MVA isn’t sustainable;

- The MVP is partially but not wholly successful, but it can be fixed;

- The MVA is also partially but not wholly successful and needs improvement;

- The MVP isn’t successful and so the MVA doesn’t matter.

Of these scenarios, the first is what everyone dreams of but it’s rarely encountered. Scenarios 2-4 are more likely - partial success, but significant work remains. The final scenario means going back to the drawing board, usually because the organization’s understanding of customer needs was significantly lacking.

In the most likely scenarios, as the MVP is expanded, and as more capabilities are added, the MVA also needs to be evolved. The MVA may need to change because of new capabilities that the MVP takes on: some additional architectural decisions may need to be made, or some previous decisions may need to be reversed. The team’s work on each release is a balance between MVP and MVA work.

How can we achieve this balance? Both the MVP and the MVA involve trade-offs in solving different kinds of problems. For the MVP, the problem is delivering valuable customer outcomes, while for the MVA, the problem is delivering those outcomes sustainably.

Teams have to resist two temptations regarding the MVA: the first is ignoring its long-term value altogether and focusing only on quickly delivering functional product capabilities using a “throw-away” MVA. The second temptation they must resist is over-architecting the MVA to solve problems they may never encounter. This latter temptation bedevils traditional teams, who are under the illusion that they have the luxury of time, while the first temptation usually afflicts agile teams, who never seem to have enough time for the work they take on.

Dealing with rework through anticipation

Rework is, at its core, caused by having to undo decisions that have been made in the past. Rework is inevitable, but as it is more expensive than “simple” work because it includes undoing previous work, it’s worth taking time to reduce it as much as possible. This means, where possible, anticipating future rework and taking measures to make it easier when it does happen.

Modular code helps reduce rework by localizing changes and preventing them from rippling throughout the code base. Localizing dependencies on particular technologies and commercial products makes it easier to replace them later. Designing to make everything easily replaceable, as much as possible, is one way to limit rework. As with over-architecting to solve problems that never occur, developers need to look at the likelihood that a particular technology or product may need to be replaced. For example, would it be possible to port the MVA to a different commercial cloud or even move it back in-house if the system costs outweigh the benefits of the MVP? When in doubt, isolate.
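One common way to isolate a technology dependency is to have product code depend on a small interface and to confine vendor-specific code to an adapter behind it. The following sketch shows the idea under stated assumptions: `DocumentStore`, `InMemoryStore`, and `ReportService` are hypothetical names invented for this example, and the in-memory adapter merely stands in for whatever commercial or cloud storage product a team might actually use.

```python
from typing import Protocol


class DocumentStore(Protocol):
    """The only storage interface the rest of the product depends on."""

    def save(self, key: str, content: bytes) -> None: ...

    def load(self, key: str) -> bytes: ...


class InMemoryStore:
    """Simple adapter; a vendor-specific adapter would expose the same methods."""

    def __init__(self) -> None:
        self._items: dict[str, bytes] = {}

    def save(self, key: str, content: bytes) -> None:
        self._items[key] = content

    def load(self, key: str) -> bytes:
        return self._items[key]


class ReportService:
    """Product code: knows about DocumentStore, not about any vendor."""

    def __init__(self, store: DocumentStore) -> None:
        self._store = store

    def publish(self, report_id: str, text: str) -> None:
        self._store.save(f"reports/{report_id}", text.encode("utf-8"))

    def read(self, report_id: str) -> str:
        return self._store.load(f"reports/{report_id}").decode("utf-8")


service = ReportService(InMemoryStore())
service.publish("q1", "Quarterly summary")
print(service.read("q1"))  # Quarterly summary
```

If the storage product later has to be replaced, only a new adapter needs to be written; `ReportService` and its callers are untouched. The cost is one extra layer of indirection, which is why the likelihood of replacement should be weighed before isolating everything.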

Rework is triggered by learning new things that invalidate prior decisions. As a result, when reviewing the results from an MVP release, teams can ask themselves if they learned anything that tells them that they need to revisit some prior decision. If so, their next question should be whether that issue needs to be addressed in the next release because it is somehow related to the next release’s MVP. When that’s the case, the team will need to make room for the rework when they scope the rest of the work.
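A lightweight way to support this review is to record the assumptions behind each decision, so that newly invalidated assumptions point directly at the decisions to revisit. The sketch below is only one possible shape for such a log: the `Decision` class, the `decisions_to_revisit` function, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    """An architectural decision and the assumptions it rests on."""
    title: str
    assumptions: set[str] = field(default_factory=set)


def decisions_to_revisit(log: list[Decision],
                         invalidated: set[str]) -> list[Decision]:
    """Return decisions that rest on at least one invalidated assumption."""
    return [d for d in log if d.assumptions & invalidated]


# Hypothetical decision log for a product.
log = [
    Decision("Single relational database", {"under 1000 concurrent users"}),
    Decision("Server-rendered UI", {"users are on desktop browsers"}),
]

# What the team learned from the latest MVP release (also hypothetical).
learned_false = {"under 1000 concurrent users"}

revisit = decisions_to_revisit(log, learned_false)
print([d.title for d in revisit])  # ['Single relational database']
```

The output of such a check is a candidate list, not a plan: the team still has to judge whether each flagged decision is related to the next release’s MVP and therefore needs room in its scope.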

Sometimes this means that the MVP needs to be less aggressive in order to make room in the release cycle (e.g. Sprint or increment) to deal with architectural issues, which may not be an easy sell to some of the stakeholders. To that point, keeping all stakeholders apprised of the trade-offs and their rationale at every stage of the design and delivery of the MVP helps to facilitate important conversations when the team feels they need to spend time reducing technical debt or improving the MVA instead of delivering a “quick and dirty” MVP.

The same thing is true for dealing with new architectural issues: As the team looks at the goals for the release of the MVP, they need to ask themselves what architectural decisions need to be made to ensure that the product will remain sustainable over time.

Conclusion

No matter what you do, you will end up with an architecture. Whether it is good or bad depends on your architectural decisions and their timing. For us, a “good” architecture is one that meets its QARs over its lifetime. Because systems are constantly changing as they evolve, this “architectural goodness” also constantly changes and evolves.

In an agile approach, teams develop and grow the architecture of their products continuously. Each release involves making decisions and trade-offs, informed by experiments that the team conducts to test the limits of their decisions. To frame evolving the architecture as an either-or trade-off with developing functional aspects of the product is a false dichotomy; teams must do both, but achieving a balance between the two is more art than science.

There really is no such thing as a “last responsible moment” for architectural decisions, as that moment can only be determined ex post facto. Using the evolution of the MVP to examine which decisions are due provides more concrete guidance on when they need to be made. In this context, the goal of the MVP is to determine whether its benefits result in improved outcomes for the customers or users of the product, while the goal of the MVA is to ensure that the product will be able to sustain those benefits over its full lifespan.

A big challenge teams face is how to do this under extreme time pressure. Developing a system in short intervals is challenging by itself, and adding “extra” architectural work adds complexity that teams can struggle to overcome. Using decisions about the MVP to guide architectural decisions can help teams decide what parts need to be built with the future in mind, and what parts can be considered “throw-aways”.

Finally, we should assume that every architectural decision will need to be undone at some point. Make options for yourself and design for replaceability. Isolate decisions by modularizing code and using abstraction and encapsulation to reduce the cost of undoing decisions.
